Imagine a 3D artist meticulously hand-painting a single realistic wood texture for hours, or even days. Now, picture that same artist generating dozens of unique, photorealistic variations in a matter of minutes. This is no longer a concept from science fiction; it's the new reality powered by AI texture generation.

The New Frontier of Digital Artistry

AI texture generation is not about replacing artists. It's about providing them with a powerful collaborator—a digital assistant trained on a world of visual data, ready to generate nearly any material you can describe in simple text.

This shift is fundamentally reshaping creative workflows in gaming, film, and architectural visualization. What once required hours of painstaking manual labor can now be achieved in a fraction of the time, freeing up artists to focus on higher-level creative direction and execution.

Accelerating the Creative Process

The most significant advantage is speed. Artists can rapidly prototype ideas, experiment with different material aesthetics, and build extensive libraries of unique assets without starting from scratch each time. This allows for more iteration and refinement, which typically leads to a higher-quality final product.

For studios and creative teams, this translates directly into faster production cycles and greater creative flexibility. The ability to generate bespoke textures on demand means projects are no longer constrained by the limitations of stock asset libraries or the time required for manual creation.

Think of it this way: AI texture generation gives every artist their own infinite material library. Instead of searching for the right texture, they can create it by describing their creative vision.

Key Benefits for Creative Teams

This technology is about more than just efficiency; it's about unlocking new creative possibilities. Here’s how teams are already benefiting:

- Rapid Prototyping: Quickly apply placeholder or even final-quality textures to models to visualize environments and assets. This makes it easier to establish a project's art direction early in the process.

- Endless Variation: Generate countless versions of a single material, such as different levels of wear and tear on metal or unique grain patterns in wood. This level of detail adds incredible realism to a scene.

- Enhanced Creativity: By automating the more repetitive aspects of texture creation, AI frees artists to spend more time on composition, lighting, and overall artistic vision.

One crucial point for professional and enterprise work is the source of the AI model. Partnering with a platform that uses a compliant, secure, and ethically trained model is non-negotiable. This ensures that creative freedom is built on a responsible foundation, protecting projects from potential copyright or compliance issues.

How AI Learns to Create Textures

At its core, AI texture generation is not about following a set of predefined rules. It’s about learning from vast datasets of examples, similar to an apprentice studying a comprehensive library of masterworks.

The AI model is trained on millions of images, from weathered wood and polished stone to woven fabric. It learns the subtle patterns, imperfections, and details that make each material appear realistic. This deep knowledge allows it to generate entirely new textures that are not mere copies, but original creations based on the principles it has absorbed.

The Competition That Creates Realism

One of the foundational techniques in this field is the Generative Adversarial Network, or GAN. This concept involves a clever "generator" in a constant competition with a "discriminator."

The generator's role is to create textures so convincing they can fool the discriminator. The discriminator is trained on both real-world images and the generator's output, and it continuously improves at telling the two apart. Each time the discriminator spots a fake, the generator uses that feedback to refine its approach. This relentless feedback loop pushes the generator toward increasingly realistic and detailed results.
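The original GAN paper (Goodfellow et al., 2014) states this competition precisely as a minimax game. In the formula below, G maps random noise z to an image, and D(x) is the discriminator's estimated probability that x is a real training image:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize V by scoring real textures near 1 and generated ones near 0. The generator tries to minimize V by pushing D(G(z)) toward 1.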

The introduction of GANs in 2014 was a significant turning point for AI in the creative space. Later GAN variants scaled this adversarial process to huge datasets, such as the ImageNet database with its 14 million+ labeled images, and learned to capture what makes a texture look authentic. You can discover more about the history of AI in visual art to explore its foundational concepts.

Sculpting Detail From Digital Noise

A more recent and powerful method involves diffusion models. This approach is fundamentally different. Imagine a sculptor starting not with a block of marble, but with a dense cloud of digital static, or "noise." Their task is to methodically carve away that noise to reveal a detailed and coherent image hidden within.

Diffusion models work by learning this process in reverse. They are trained to undo the gradual addition of noise to a clean image. When given a text prompt like "cracked desert ground," the model starts with pure static and refines it step by step. At each step it removes noise in a way that aligns with the description, until a high-fidelity texture emerges.
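The Python sketch below makes the noising half of this process concrete, in the style of DDPM diffusion models. It has two parts:

- a schedule of small noise variances. The linear 1e-4 to 0.02 over 1,000 steps is one common default, assumed here.
- a function that jumps a clean image straight to any noise level.

The reverse, denoising half needs a trained neural network that predicts the noise from the noisy image, the step number and the text prompt, so it is not shown. The pixel values are hypothetical stand-ins for a real texture.

```python
import math
import random

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each step, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the signal variance left."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, t, alpha_bars, rng):
    """Jump straight to noise level t: sqrt(a) * x0 + sqrt(1 - a) * eps, eps ~ N(0, 1)."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in pixels]

betas = linear_beta_schedule()
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
clean = [0.8, 0.2, 0.5, 0.9]  # hypothetical pixel values standing in for a texture
for t in (0, 250, 500, 999):
    noisy = add_noise(clean, t, alpha_bars, rng)
    print(f"t={t:4d} signal weight={math.sqrt(alpha_bars[t]):.4f} first pixel={noisy[0]:+.3f}")
```

By the last step, the weight on the original image has fallen below 0.01, so the sample is essentially pure static. That static is the starting point the trained model learns to walk back from.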

This progressive refinement is why diffusion models offer such incredible detail and creative control. It allows for a level of nuance and complexity that is difficult to achieve with other methods, making it the preferred approach for high-quality creative work.

Comparing AI Texture Generation Models

GANs and Diffusion Models are both powerful technologies, but each has strengths and weaknesses that make it better suited for certain tasks. Choosing the right model is like selecting the appropriate tool for a specific job.

The table below breaks down the key differences to help you understand which model might be the best fit for your project's needs.

Ultimately, both technologies are incredibly capable, but diffusion models have become the preferred choice for artists and studios that require maximum quality and creative control over their final textures.

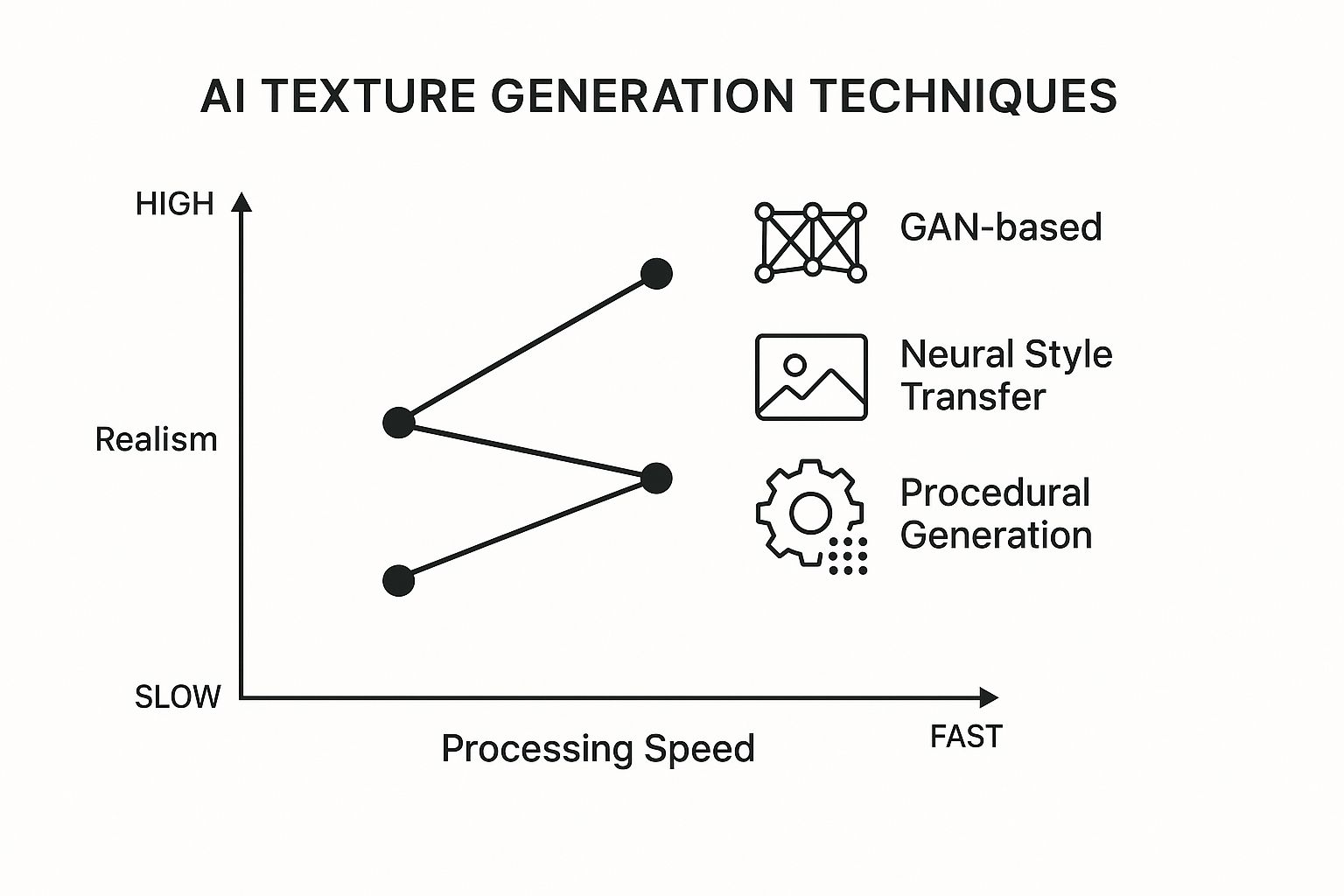

As the chart shows, there is often a trade-off between speed and realism. Some techniques are very fast but may not deliver the required realism. GANs, however, strike a solid balance between the two. Understanding these core technologies helps clarify how a simple text description can be transformed into a visually stunning material, ready for any 3D project.

The Evolution from Theory to Creative Tool

The journey of AI texture generation from a niche academic concept to an essential part of a digital artist's toolkit has been remarkably swift. For a long time, generating images from text was confined to research labs, with early models producing blurry, abstract visuals unsuitable for professional creative work.

The landscape changed with the arrival of powerful, large-scale models. Trained on massive libraries of images and text, these systems began to understand the deep connections between descriptive words and the pixels that form an image. This was the breakthrough that paved the way for the intuitive "text-to-texture" tools available today, removing the barrier between a creative idea and a finished asset.

The Models That Changed Everything

The release of DALL-E in early 2021 was a significant milestone. This model, with 12 billion parameters, could create surprisingly coherent and creative images from simple text prompts. However, the true game-changer for many artists and studios came in 2022 with the launch of Stable Diffusion by Stability AI. This diffusion-based model could produce photorealistic, high-quality textures and images from a text prompt. You can learn more about the key moments in AI's creative timeline to see how these developments fit together.

The key catalyst was Stability AI's decision to release the model's weights openly rather than keep them behind a paid API. This move democratized a technology previously accessible only to large research organizations.

This open release put state-of-the-art AI texture generation directly into the hands of independent artists, small studios, and large enterprises alike, sparking a global wave of creative innovation.

Reshaping Modern Creative Workflows

This newfound accessibility has transformed creative workflows across industries. Artists no longer need to spend hours searching stock photo libraries or days building a single material from scratch.

- In Game Development: Studios use AI for rapid prototyping. A level designer can generate dozens of variations for a "grimy sci-fi metal floor" or an "overgrown jungle vine" in minutes, not days.

- In Film and VFX: Visual effects artists can generate hyper-detailed surfaces for digital props and environments. Creating a specific "pitted, iridescent alien rock" texture is now achievable almost instantly.

- In Architectural Visualization: Designers can generate specific wood grains, concrete finishes, or fabric patterns on demand, closely matching a client's vision without an extensive search.

This shift has not only accelerated timelines but has also unlocked a level of creative experimentation that was previously impractical. The ability to iterate on visual ideas at the speed of thought has become a significant competitive advantage, allowing creative teams to push boundaries and achieve a higher quality bar more efficiently. This rapid evolution has cemented AI texture generation as a cornerstone of modern digital content creation.

Integrating AI Textures into Your 3D Workflow

Generating a compelling image is just the first step. The real value is realized when you integrate that asset into your professional pipeline and turn an idea into a functional, project-ready material. This process begins with the text prompt—your primary means of directing the AI.

Effective prompting requires more than simple descriptions. Instead of just “stone wall,” think like an artist. A prompt such as “ancient mossy cobblestone wall, wet from recent rain, detailed 4K, photorealistic” provides the specific context the model needs to deliver a high-quality result. Selecting the right tool is also important. If you're exploring options, see our guide on the best AI for image creation.
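Writing prompts as a subject plus explicit modifiers also makes them easy to vary systematically. The sketch below assumes nothing about any particular platform's API:

- `build_prompt` and `prompt_variations` are hypothetical helpers that only assemble text.
- The comma-separated style is a common convention, not a requirement.

```python
from itertools import product

def build_prompt(subject, details, quality=("detailed 4K", "photorealistic")):
    """Join a subject, scene-specific details, and quality tags into one prompt string."""
    return ", ".join([subject, *details, *quality])

def prompt_variations(subject, detail_options):
    """Yield one prompt per combination of detail options (e.g. wetness x moss level)."""
    for combo in product(*detail_options):
        yield build_prompt(subject, combo)

base = build_prompt("ancient mossy cobblestone wall", ["wet from recent rain"])
print(base)
# ancient mossy cobblestone wall, wet from recent rain, detailed 4K, photorealistic

variants = list(prompt_variations(
    "ancient cobblestone wall",
    [["dry", "wet from recent rain"], ["light moss", "heavy moss", "no moss"]],
))
print(len(variants))  # 6
```

Each detail list multiplies the number of prompts, so a handful of options quickly yields a broad set of candidate materials to generate and compare.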

Preparing Textures for 3D Software

Once you have an image, the next step is preparing it for software like Blender or Maya, and game engines like Unreal Engine or Unity. A critical part of this process is ensuring the texture is seamless and tileable.

A seamless texture can be repeated across a large surface, such as a floor or a brick wall, without any visible edges or breaks in the pattern. For most 3D work, this is essential. Fortunately, many modern AI texture generation platforms have built-in features to create tileable outputs directly, saving considerable manual cleanup work.
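You can check whether a texture will tile before it ever reaches a 3D scene by comparing its opposite edges. The sketch below uses a simple heuristic of our own, not a feature of any specific platform. It treats a grayscale image as a list of rows. It then compares the jump across the wrap-around seam with the typical jump between neighboring pixels.

```python
def horizontal_seam_ratio(img):
    """Wrap-around jump (last column -> first column) divided by the average jump
    between neighboring interior columns. A ratio near 1.0 suggests the left and
    right edges will meet without a visible seam when the texture is tiled."""
    height, width = len(img), len(img[0])
    interior = sum(abs(row[x + 1] - row[x]) for row in img for x in range(width - 1))
    interior /= height * (width - 1)
    seam = sum(abs(row[0] - row[-1]) for row in img) / height
    if interior == 0:
        return 0.0 if seam == 0 else float("inf")
    return seam / interior

def vertical_seam_ratio(img):
    """Same check for the top and bottom edges, done by transposing the image."""
    return horizontal_seam_ratio([list(col) for col in zip(*img)])

gradient = [[x for x in range(8)] for _ in range(8)]              # 0..7, left to right
triangle = [[min(x, 8 - x) for x in range(8)] for _ in range(8)]  # 0,1,2,3,4,3,2,1
print(horizontal_seam_ratio(gradient))  # 7.0: a hard seam when tiled
print(horizontal_seam_ratio(triangle))  # 1.0: edges continue smoothly
```

A real texture is noisier than these toy images, so in practice a threshold would need tuning rather than expecting exactly 1.0.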

The ability to generate tileable textures on demand eliminates a major bottleneck in environment art. It allows artists to cover massive game levels or architectural scenes with unique, high-resolution materials that repeat flawlessly.

From Flat Image to PBR Material

A flat color image, often called an albedo or diffuse map, is only one component of a realistic material. To create a material that interacts with light correctly, you need a full set of Physically Based Rendering (PBR) maps. These additional maps inform the 3D engine how the surface should behave.

This is another area where AI-powered tools offer a significant speed advantage. The key PBR maps include:

- Normal Map: Adds the illusion of surface detail, like bumps and cracks, without increasing the model's polygon count.

- Roughness Map: Controls how rough or smooth a surface is, determining whether reflections are sharp (like polished metal) or scattered (like concrete).

- Metallic Map: A simple black-and-white map that defines which parts of the material are metallic.

- Ambient Occlusion (AO) Map: Simulates the soft contact shadows in creases and crevices, adding a sense of depth and realism.

Specialized software can now take your initial AI-generated color texture and intelligently produce all of these corresponding PBR maps. This workflow transforms a simple 2D image into a fully functional 3D material, ready to be applied to any model. This tight integration of AI texture generation and PBR map creation is a game-changer for the material authoring process.
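Each tool has its own approach, but the core of normal-map generation takes three steps:

1. Treat the height map as a surface.
2. Measure its slope in x and y.
3. Turn each slope into a unit vector packed into RGB.

The minimal sketch below assumes a grayscale height map stored as rows of floats between 0 and 1. `height_to_normal_map` and its `strength` parameter are hypothetical names. Engines also disagree on the sign of the green channel (OpenGL-style versus DirectX-style normal maps), so you may need to invert the green channel for your target engine.

```python
import math

def height_to_normal_map(height, strength=1.0):
    """Convert a height map (rows of floats in 0..1) into tangent-space normals
    encoded as 0-255 RGB tuples, using central differences. Neighbors wrap
    around the edges, so a tileable height map gives a tileable normal map."""
    h, w = len(height), len(height[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            # Slope in x and y from the pixels on either side (wrapping at edges).
            dx = (height[y][(x + 1) % w] - height[y][(x - 1) % w]) * 0.5 * strength
            dy = (height[(y + 1) % h][x] - height[(y - 1) % h][x]) * 0.5 * strength
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Normalize, then map each component from [-1, 1] to [0, 255].
            row.append(tuple(round((c / length * 0.5 + 0.5) * 255) for c in (nx, ny, nz)))
        normals.append(row)
    return normals

flat = [[0.5] * 4 for _ in range(4)]
print(height_to_normal_map(flat)[0][0])  # (128, 128, 255)
```

A perfectly flat height map produces the familiar pale blue (128, 128, 255) everywhere. Real tools usually add smoothing and further controls on top of this core step.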

Real-World Applications of AI Texturing

AI texture generation is no longer a future concept—it is already delivering tangible value across creative industries. This is a proven, commercially viable tool that studios and digital artists are using right now to accelerate their work and push creative boundaries.

The technology fundamentally changes how artists approach their work. Instead of spending hours searching for the right asset in a library, they can create it on demand. This makes the entire creative process more fluid and iterative.

Accelerating Game Development

In the fast-paced world of game development, efficiency is crucial. Studios are now using AI to rapidly prototype entire environments, generating dozens of material variations in the time it once took to create one by hand. This leads to faster art direction decisions and more polished final worlds.

The process of creating variations of character assets—such as worn leather armor or unique alien skin patterns—is now significantly streamlined. This reduces production time and costs, enabling both large AAA studios and small indie teams to achieve a higher level of detail. The impact on creating immersive 3D gaming backgrounds is particularly significant, as artists can fill massive worlds with unique, non-repeating materials.

AI texture generation acts as a creative multiplier, giving artists the freedom to experiment with countless visual ideas without the traditional time constraints. This is how groundbreaking and memorable game worlds are developed.

The commercial impact is clear. As these tools become more integrated, production costs decrease by automating what was previously labor-intensive asset creation. In fact, market analysts predicted that by 2024, over 40% of digital content creators would be using AI-assisted texture tools to accelerate their visual development. If you're interested in the journey to this point, you can read more about AI's history in image generation.

Pushing Boundaries in Film and Architecture

The benefits extend beyond gaming. In architectural visualization, designers can now generate hyper-specific materials directly from a client’s description—a certain grain of reclaimed oak, a unique concrete finish, or a custom fabric pattern. This ensures the final render perfectly matches the intended vision.

In film and VFX, artists use AI texture generation to create incredibly detailed surfaces for digital props, characters, and environments. Crafting a complex, weathered spaceship hull or the intricate scales on a mythical creature once required weeks of painstaking manual work. Now, it can be accomplished in a fraction of the time.

This technology doesn't just accelerate timelines; it frees up artists to focus their expertise on composition, lighting, and storytelling—the areas where their creative input matters most. AI texturing is no longer a novelty; it's becoming a core component of the modern creative toolkit.

Common Questions About AI Texture Generation

As AI texture generation becomes a standard part of the creative toolkit, practical questions naturally arise. Understanding the technology's capabilities, limitations, and legal considerations is essential for professional use.

Let's address some of the most common questions from artists and studios to provide the clarity needed to use these powerful tools responsibly in a professional environment.

Can AI Generate Seamless and Tileable Textures?

Yes, absolutely. Most professional-grade AI texture platforms now include features specifically for creating seamless textures that tile perfectly. This is a critical requirement for game development and architectural visualization, where large surfaces like floors, walls, or terrain must be covered without visible seams.

If a chosen tool lacks a one-click option, artists can use standard post-processing techniques in software like Adobe Photoshop or other tiling utilities to blend the edges and create a flawless, repeating pattern.
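One common manual technique is offset-and-blend. First, shift the image by half its width, as an image editor's Offset filter does, so the seam moves to the center. Then blend the shifted copy with the original so neither seam shows.

The sketch below applies that idea to a grayscale image stored as a list of rows. `make_tileable` and `edge_jumps` are hypothetical helpers. The triangle-shaped blend weight is an assumption chosen for simplicity.

```python
def make_tileable(img):
    """Make a grayscale image (list of rows) tile seamlessly, one axis at a time.

    For each axis, build a copy shifted by half the size with wrap-around; its
    own edges already match. Blend so the original dominates in the middle and
    the shifted copy takes over at the edges."""
    def blend_horizontally(src):
        h, w = len(src), len(src[0])
        out = []
        for y in range(h):
            row = []
            for x in range(w):
                # Triangle weight: 0.0 at both edges, 1.0 in the middle.
                weight = 1.0 - abs(2.0 * x / (w - 1) - 1.0)
                shifted = src[y][(x + w // 2) % w]
                row.append(weight * src[y][x] + (1.0 - weight) * shifted)
            out.append(row)
        return out

    def transpose(m):
        return [list(col) for col in zip(*m)]

    # Horizontal pass, then a vertical pass via transpose. The vertical pass uses
    # the same weights for every column, so it keeps the left/right seam closed.
    return transpose(blend_horizontally(transpose(blend_horizontally(img))))

def edge_jumps(img):
    """Average jump across the left/right and top/bottom wrap-around edges."""
    horizontal = sum(abs(row[0] - row[-1]) for row in img) / len(img)
    vertical = sum(abs(a - b) for a, b in zip(img[0], img[-1])) / len(img[0])
    return horizontal, vertical

ramp = [[x + 2 * y for x in range(8)] for y in range(8)]
print(edge_jumps(ramp))                 # (7.0, 14.0)
print(edge_jumps(make_tileable(ramp)))  # about (1.0, 2.0), the size of a normal pixel step
```

The trade-off is some ghosting where the two copies overlap. This is why artists often touch up the blended area by hand afterward.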

What Are the Copyright Implications of AI Textures?

This is a critical consideration for any enterprise. The legal landscape for AI-generated content is still evolving, but for now, usage is governed by the terms of service of the platform you use. While many services grant commercial rights to the images you create, that is only the starting point for professional work.

The primary risk for businesses lies in the data used to train the AI model. It is essential to partner with a provider that guarantees its training data is ethically sourced and commercially safe. This is what protects your projects from potential copyright claims.

Always review the licensing terms carefully before incorporating any AI-generated asset into a commercial project. For professional studios, this is not just a suggestion—it is a matter of essential due diligence.

How Do I Create PBR Material Maps from an AI Texture?

Transforming a single AI-generated image—the base color or albedo map—into a complete Physically Based Rendering (PBR) material is a standard part of modern 3D pipelines. Fortunately, this process is now largely automated.

Tools like Adobe Substance 3D Sampler or the open-source Materialize can take a base image and intelligently generate the other necessary maps by analyzing its color and light information. This typically includes:

- Normal Map: Adds fine surface details and simulates depth.

- Roughness Map: Controls how light reflects off the surface, from matte to glossy.

- Metallic Map: Defines which parts of the material are metallic.

- Ambient Occlusion Map: Creates soft, realistic contact shadows.

In just a few clicks, you can convert a flat image into a rich, realistic material ready for any modern 3D engine.
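Tools like these do not publish a single standard algorithm. The following is only a rough illustration of the kind of analysis involved, not how Substance 3D Sampler or Materialize actually work. It makes two assumptions:

- Brighter pixels are higher. This often holds for stone or concrete but fails for, say, dark paint on a raised surface.
- An approximate ambient-occlusion map can be made by darkening pixels that sit below their neighbors.

`luminance_height` and `cavity_ao` are hypothetical names. The luminance weights are the standard Rec. 709 coefficients.

```python
def luminance_height(albedo):
    """Estimate a height map (0..1) from an RGB albedo given as rows of
    (r, g, b) tuples in 0..255, treating brighter pixels as higher."""
    return [[(0.2126 * r + 0.7152 * g + 0.0722 * b) / 255.0 for r, g, b in row]
            for row in albedo]

def cavity_ao(height, radius=1, strength=1.0):
    """Heuristic ambient occlusion: a pixel lower than the average of its
    neighbors (wrapping at the edges) is treated as a crevice and darkened.
    Returns values in 0..1, where 1.0 means fully unoccluded."""
    h, w = len(height), len(height[0])
    ao = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbors = [height[(y + dy) % h][(x + dx) % w]
                         for dy in range(-radius, radius + 1)
                         for dx in range(-radius, radius + 1)
                         if (dx, dy) != (0, 0)]
            depth = sum(neighbors) / len(neighbors) - height[y][x]
            row.append(min(1.0, max(0.0, 1.0 - strength * max(0.0, depth))))
        ao.append(row)
    return ao

white, black = (255, 255, 255), (0, 0, 0)
albedo = [[white, white, white], [white, black, white], [white, white, white]]
ao = cavity_ao(luminance_height(albedo))
print(round(ao[1][1], 3), ao[0][0])  # the dark center reads as a deep crevice
```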

Is AI Generation Different from Procedural Generation?

While they can produce similar results, they operate on fundamentally different principles.

Procedural generation uses mathematical algorithms and parameters defined by a human to create content. For example, a procedural brick wall is built from strict instructions regarding brick size, mortar thickness, and color variation. It is highly controllable but can sometimes feel rigid because it is limited by those initial rules.

AI texture generation, in contrast, learns by analyzing millions of real-world images. It intuits the patterns, styles, and organic details that make a texture look authentic. It does not follow a predefined script but develops an understanding of visual principles. This allows AI to create more nuanced, varied, and photorealistic textures that would be nearly impossible to define with procedural algorithms alone.
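The procedural side of this comparison is easy to show in code. The sketch below builds a grayscale brick pattern purely from explicit rules. The function and parameter names (`brick_w`, `mortar`, `color_jitter` and so on) are hypothetical, and every visual property of the result comes from one of them.

```python
import random

def procedural_bricks(width, height, brick_w=16, brick_h=8, mortar=2,
                      color_jitter=0.1, seed=0):
    """Grayscale brick wall built from explicit rules:
    - brick size (brick_w x brick_h pixels)
    - mortar thickness
    - a half-brick offset on alternate rows
    - random per-brick brightness variation
    Mortar pixels are 0.2; bricks are around 0.6."""
    rng = random.Random(seed)
    shades = {}  # one brightness per brick, keyed by (row index, column index)
    img = []
    for y in range(height):
        row_idx = y // brick_h
        offset = brick_w // 2 if row_idx % 2 else 0  # running-bond stagger
        line = []
        for x in range(width):
            col_idx = (x + offset) // brick_w
            in_mortar = (y % brick_h) < mortar or ((x + offset) % brick_w) < mortar
            if in_mortar:
                line.append(0.2)
            else:
                key = (row_idx, col_idx)
                if key not in shades:
                    shades[key] = 0.6 + rng.uniform(-color_jitter, color_jitter)
                line.append(shades[key])
        img.append(line)
    return img

wall = procedural_bricks(64, 32, mortar=1, color_jitter=0.05)
print(len(wall), len(wall[0]))  # 32 64
```

Every change to the result means changing a parameter or adding a new rule. That is exactly the controllability, and the rigidity, described above.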

While AI is a game-changer for textures, its role is expanding across the entire 3D pipeline. To see how, check out our guide on AI 3D model generation from text and images.

Ready to bring a secure, enterprise-ready AI solution into your creative pipeline? Virtuall provides a powerful platform for generating 3D assets quickly and collaboratively. Explore Virtuall and accelerate your workflow today.